Risk Assessment Tools

“What algorithmic risk assessment has done is reveal the inequality inherent in all prediction, forcing us to confront a much larger problem than the challenges of a new technology. Algorithms shed new light on an old problem.”1

The use of Risk Assessment Instruments (RAIs) has become more popular as people in power try to find ways to decrease jail time while still claiming to be “tough on crime.” RAIs purport to evaluate whether an individual should be released on bail or detained prior to trial. However, while these instruments are typically defended as streamlining pre-trial decisions, they pose threats to civil liberties and may NOT result in a reduction of the prison population.

A government agency, such as a District Attorney’s office, can use risk assessment tools to determine who gets bail and how much, and who gets released pre-trial because they are deemed not to be a risk. However, these assessment tools perpetuate the systems of white supremacy that have infected all facets of the criminal legal system, such as policing, prosecution, and the courts. Risk Assessment Instruments in the pre-trial decision-making process will never be able to fully account for the biases built into this type of data. It’s as simple as racist data in, racist data out.

It’s not just criminal legal system data that feeds the algorithms. RAIs increasingly use data about a person’s employment, education level, housing, healthcare, income, and community relationships to help make pre-trial decisions. In other words, a person’s life, liberty, and pursuit of happiness can be at the mercy of risk assessment tools that attempt to predict the future based on data shaped by institutional racism and bias.

The Problem

“That order of operations can be problematic given the range of data that fuels the forecast. Data scientists often refer to this type of problem as “garbage in, garbage out.” In a historically biased criminal justice system, the “garbage in” can have grave consequences.”2

“Any system that relies on criminal justice data must contend with the vestiges of slavery, de jure and de facto segregation, racial discrimination, biased policing, and explicit and implicit bias, which are part and parcel of the criminal justice system. Otherwise, these automated tools will simply exacerbate, reproduce, and calcify the biases they are meant to correct.”3
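To make “garbage in, garbage out” concrete, here is a minimal sketch in Python. It is a toy illustration, not a model of any real risk assessment instrument: the two groups, their rates, and the scoring function are all hypothetical values chosen for the example. Both groups are assumed to behave identically; the only difference is how heavily each one is policed.

```python
from dataclasses import dataclass


@dataclass
class Group:
    name: str
    offense_rate: float    # hypothetical: share of people who commit an offense
    detection_rate: float  # hypothetical: share of offenses that end in arrest


def recorded_arrest_rate(group: Group) -> float:
    """What shows up in the data: behavior filtered through policing."""
    return group.offense_rate * group.detection_rate


def naive_risk_score(group: Group, scale: int = 100) -> float:
    """A toy score that treats recorded arrests as if they measured behavior."""
    return round(recorded_arrest_rate(group) * scale, 2)


# Same underlying behavior, different levels of policing (assumed numbers).
lightly_policed = Group("lightly policed", offense_rate=0.10, detection_rate=0.20)
heavily_policed = Group("heavily policed", offense_rate=0.10, detection_rate=0.60)

for group in (lightly_policed, heavily_policed):
    print(f"{group.name}: score {naive_risk_score(group)}")
```

Under these assumed numbers, the heavily policed group receives a score three times higher even though its behavior is identical. The score is measuring who gets arrested, not who poses a risk.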

- Defendant age

- Substance use

- Criminal history, including violence and failure to appear

- Active community supervision

- Pending/current charge(s)

- Employment stability

- Education

- Housing/residential stability

- Family/peer relationships

- Community ties

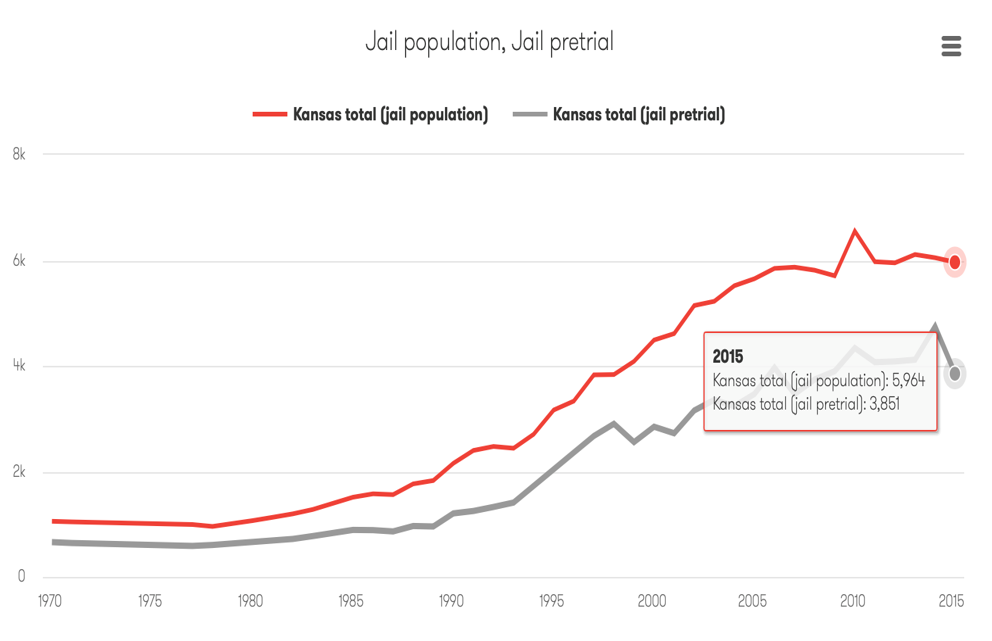

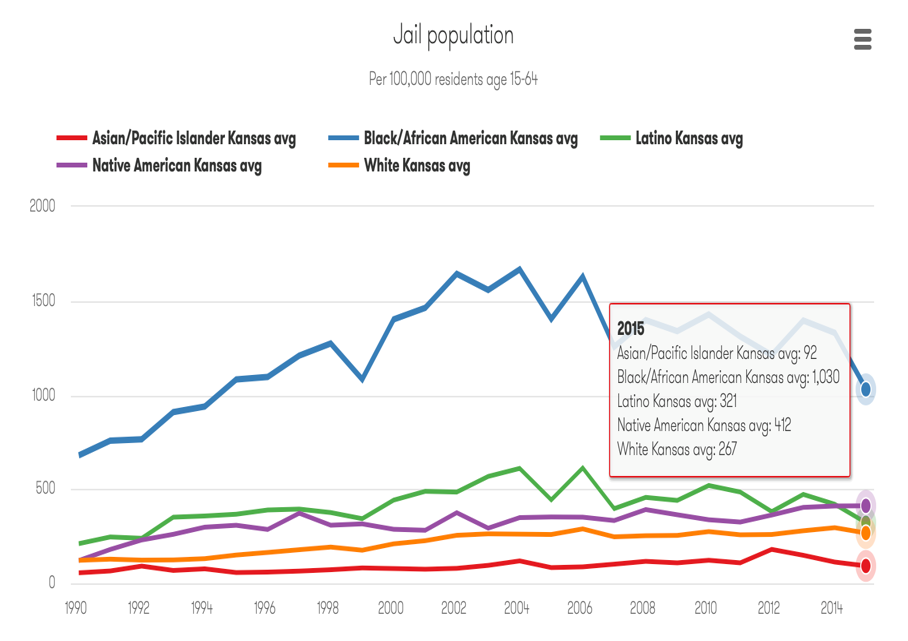

- Policing – traffic stops and searches, arrests, stop and frisk, broken windows policing, the drug war

- Jails and Prisons – In Kansas, Black people are about four times more likely to be incarcerated

- Prosecutors – discretion in bail, plea bargaining, what charges to bring, probation, juries and jury selection

- Courts – racial disparities in sentencing

- Healthcare – lack of access, insurance, mental health care, neonatal care

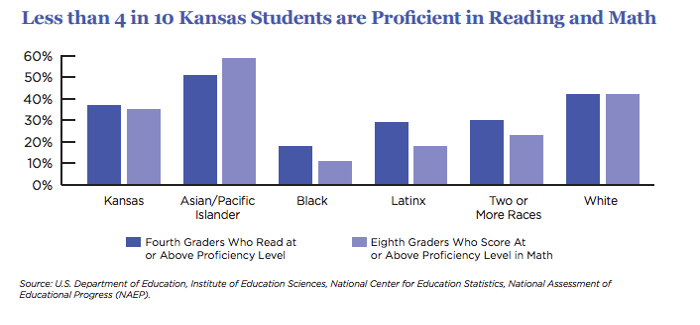

- Education – school discipline and suspensions and the school-to-prison pipeline

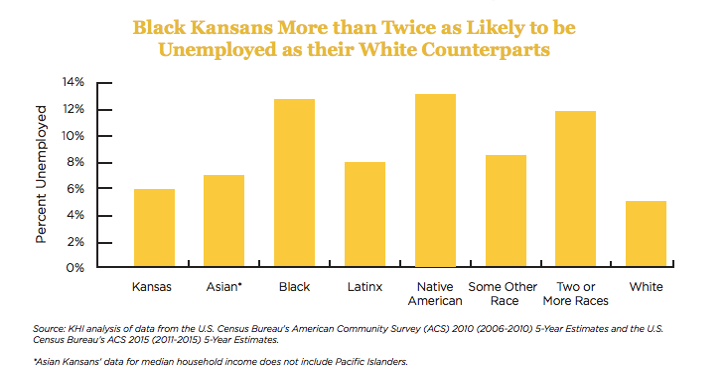

- Employment – disparities in unemployment rates, discrimination in hiring practices

Healthcare, Education, Employment, and Housing Data in Kansas